Recurrent Neural Network - 6.S191 2020

Table of Contents

- 1. Sequence Modeling

- 2. Sequence Modeling: Design Criteria

- 3. Recurrent Neural Networks for Sequence Modeling

- 4. Backpropagation Through Time

- 5. LSTMs

1. Sequence Modeling

1.1. A Fixed Window won't work because it cannot capture long-term dependencies

1.2. Use Entire Sequence as Set of Counts - Bag of Words

But counts don't preserve order, so two sentences that use the same words in a different order look identical.

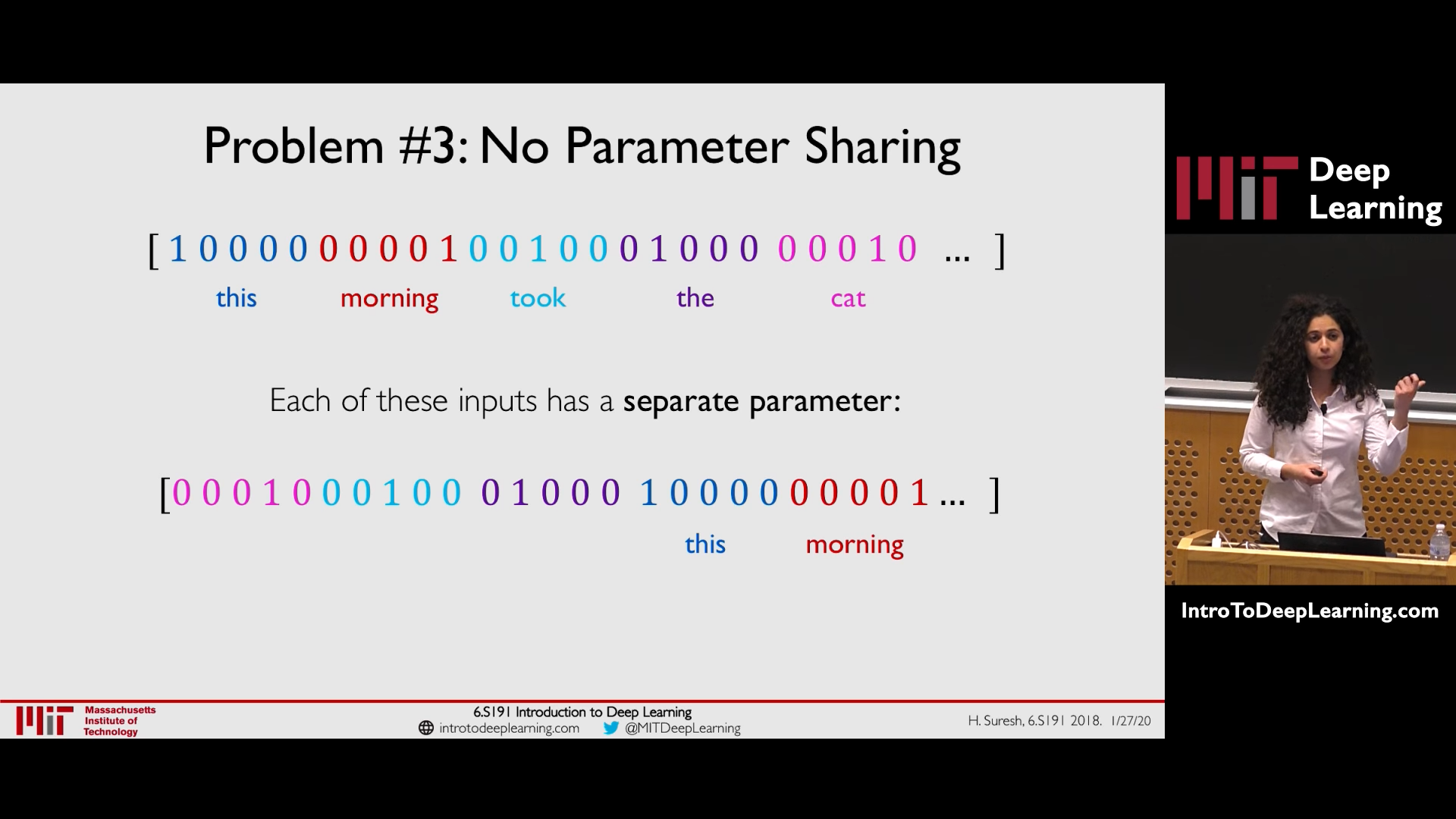
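A minimal sketch of the order problem, using only the Python standard library. The two sentences and the `bag_of_words` helper are illustrative choices, not part of the notes:

```python
from collections import Counter

def bag_of_words(sentence):
    """Return word counts for a sentence, ignoring word order."""
    return Counter(sentence.lower().split())

# Two sentences with opposite meanings built from the same words.
a = "the food was good not bad at all"
b = "the food was bad not good at all"

# The counts are identical, so a model that only sees counts
# cannot tell these sentences apart.
same_counts = bag_of_words(a) == bag_of_words(b)
print(same_counts)  # True
```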

1.3. Use a REALLY Big Fixed Window

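Each input position gets its own parameters, so something learned at one position does not transfer when the same pattern occurs at a different position; it would have to be relearned there.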

2. Sequence Modeling: Design Criteria

To model sequences, we need to:

- Handle variable-length sequences

- Track long-term dependencies

- Maintain information about order

- Share parameters across the sequence

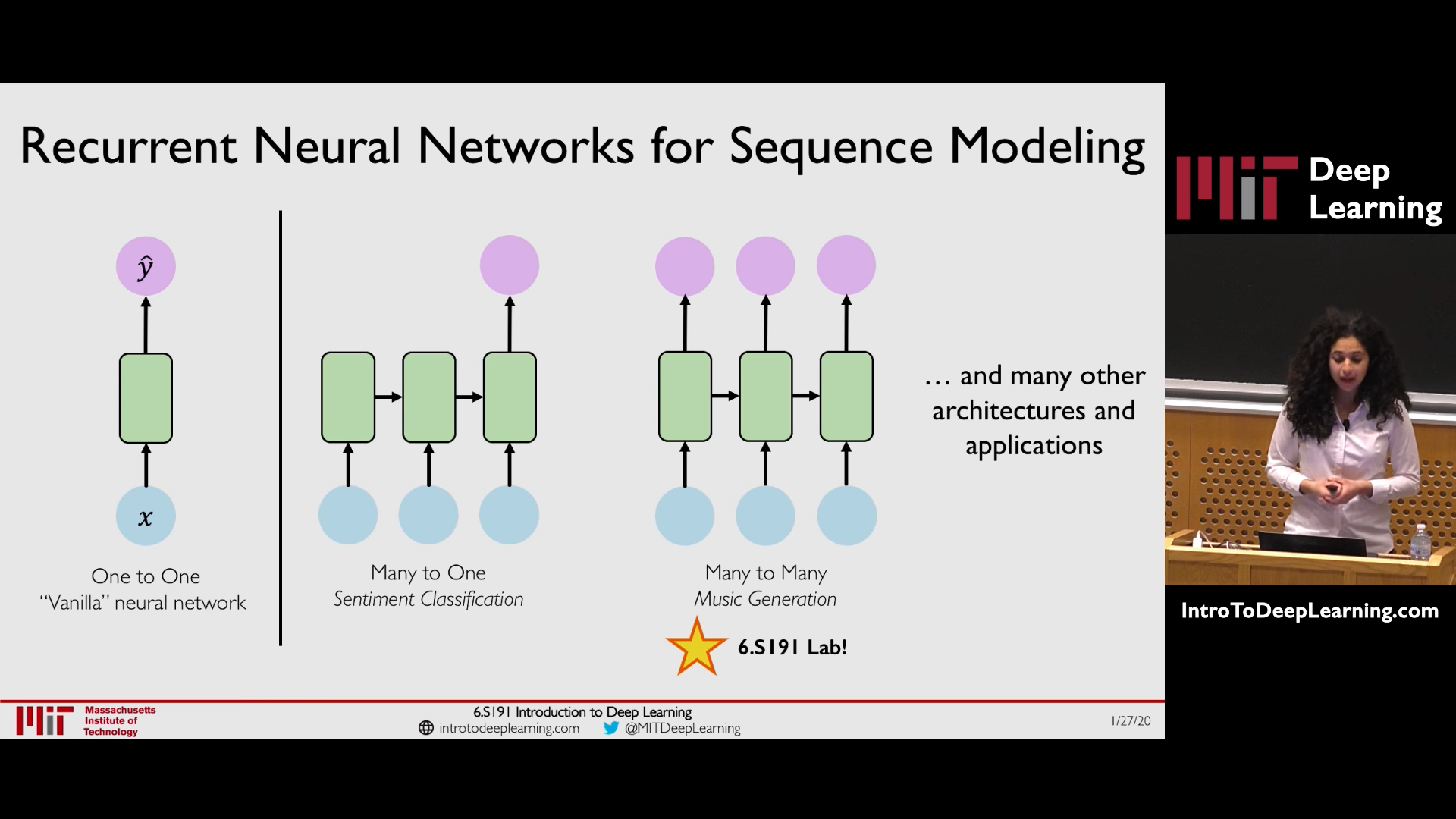

3. Recurrent Neural Networks for Sequence Modeling

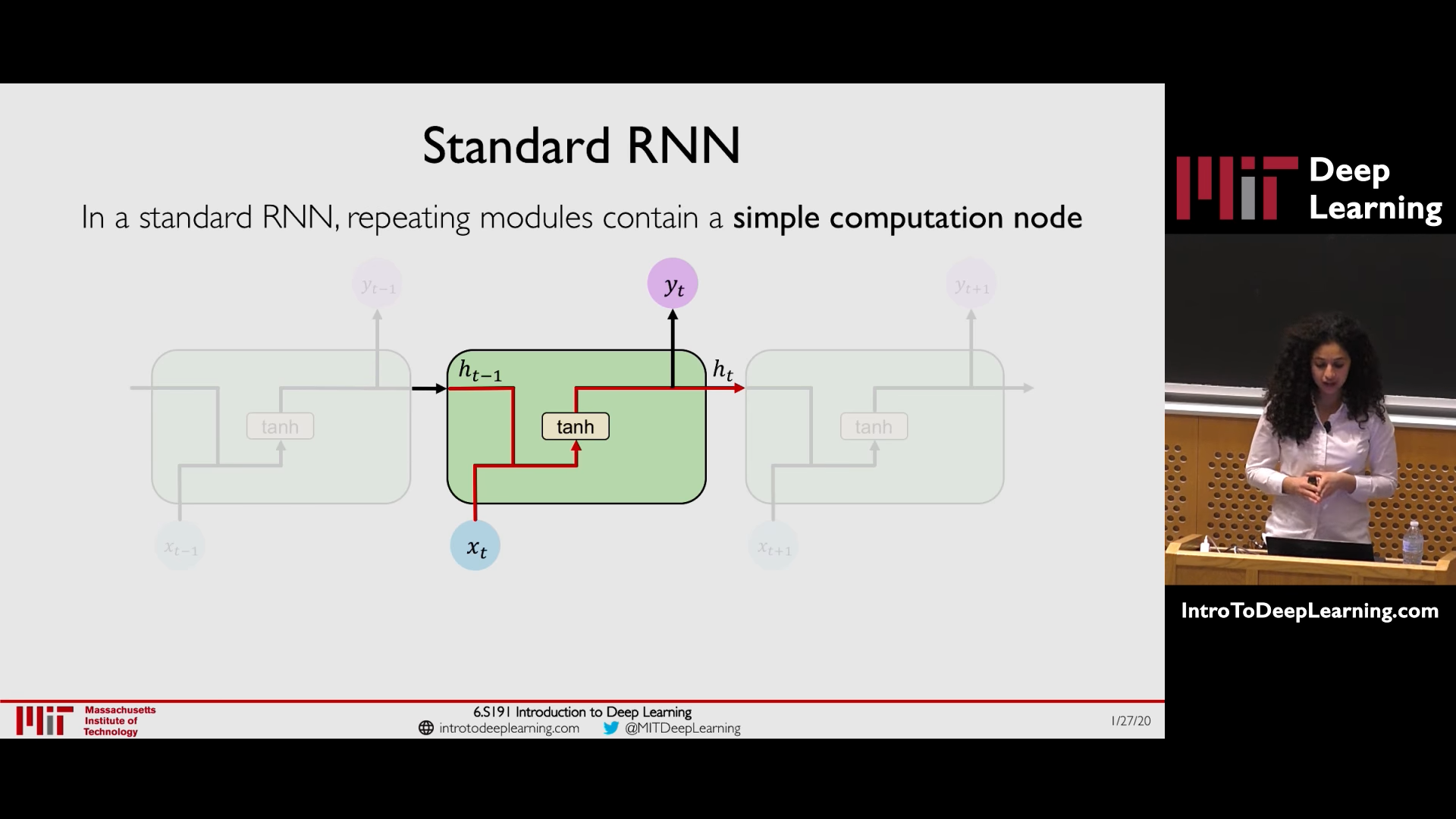

It is called recurrent because information is passed within the cell from one time step to the next.

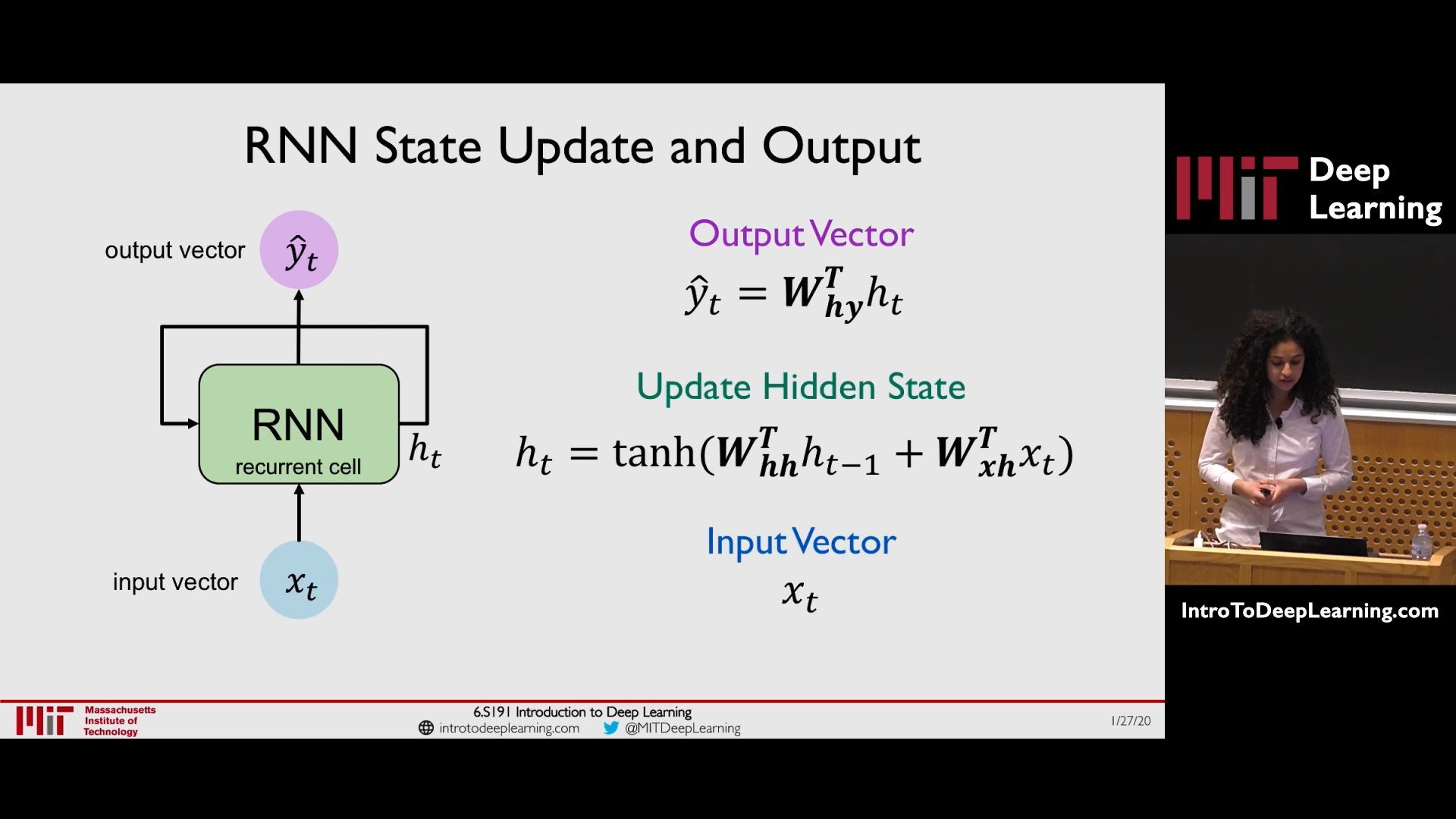

An RNN maintains an internal state \(h_t\), which is updated from the previous state and the current input: \(h_t = f_w(h_{t-1}, x_t)\). The same function and the same set of parameters are used at every time step, i.e. \(f_w\) stays the same across time steps, and its parameters are what we learn.

3.1. RNN State Update and Output

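There are three weight matrices: \(W_{xh}\) maps the input to the hidden state, \(W_{hh}\) maps the previous hidden state to the new hidden state, and \(W_{hy}\) maps the hidden state to the output.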
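A minimal sketch of one way these three matrices can be used, assuming the common vanilla RNN form \(h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t)\) and \(\hat{y}_t = W_{hy} h_t\). The notes only name the matrices, so the tanh nonlinearity, the absence of bias terms, the matrix shapes, and the helper names `matvec`, `rnn_step`, `run_rnn`, and `random_matrix` are assumptions made for illustration:

```python
import math
import random

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * a for m, a in zip(row, v)) for row in M]

def rnn_step(h_prev, x_t, W_hh, W_xh, W_hy):
    """One time step: update the hidden state, then read out an output."""
    pre = [p + q for p, q in zip(matvec(W_hh, h_prev), matvec(W_xh, x_t))]
    h_t = [math.tanh(a) for a in pre]
    y_t = matvec(W_hy, h_t)
    return h_t, y_t

def run_rnn(xs, W_hh, W_xh, W_hy, hidden_size):
    """Apply the same step (same weights) to every element of the sequence."""
    h = [0.0] * hidden_size
    ys = []
    for x_t in xs:
        h, y = rnn_step(h, x_t, W_hh, W_xh, W_hy)
        ys.append(y)
    return h, ys

def random_matrix(rows, cols, rng):
    """Small random weights; a stand-in for learned parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
input_size, hidden_size, output_size = 3, 4, 2
W_hh = random_matrix(hidden_size, hidden_size, rng)
W_xh = random_matrix(hidden_size, input_size, rng)
W_hy = random_matrix(output_size, hidden_size, rng)

# The same weights handle sequences of different lengths.
short_seq = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
long_seq = short_seq + [[0.0, 0.0, 1.0]] * 5
h_short, ys_short = run_rnn(short_seq, W_hh, W_xh, W_hy, hidden_size)
h_long, ys_long = run_rnn(long_seq, W_hh, W_xh, W_hy, hidden_size)
print(len(ys_short), len(ys_long))  # 2 7
```

Because the loop reuses one set of weights, the number of parameters does not depend on the sequence length.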

4. Backpropagation Through Time

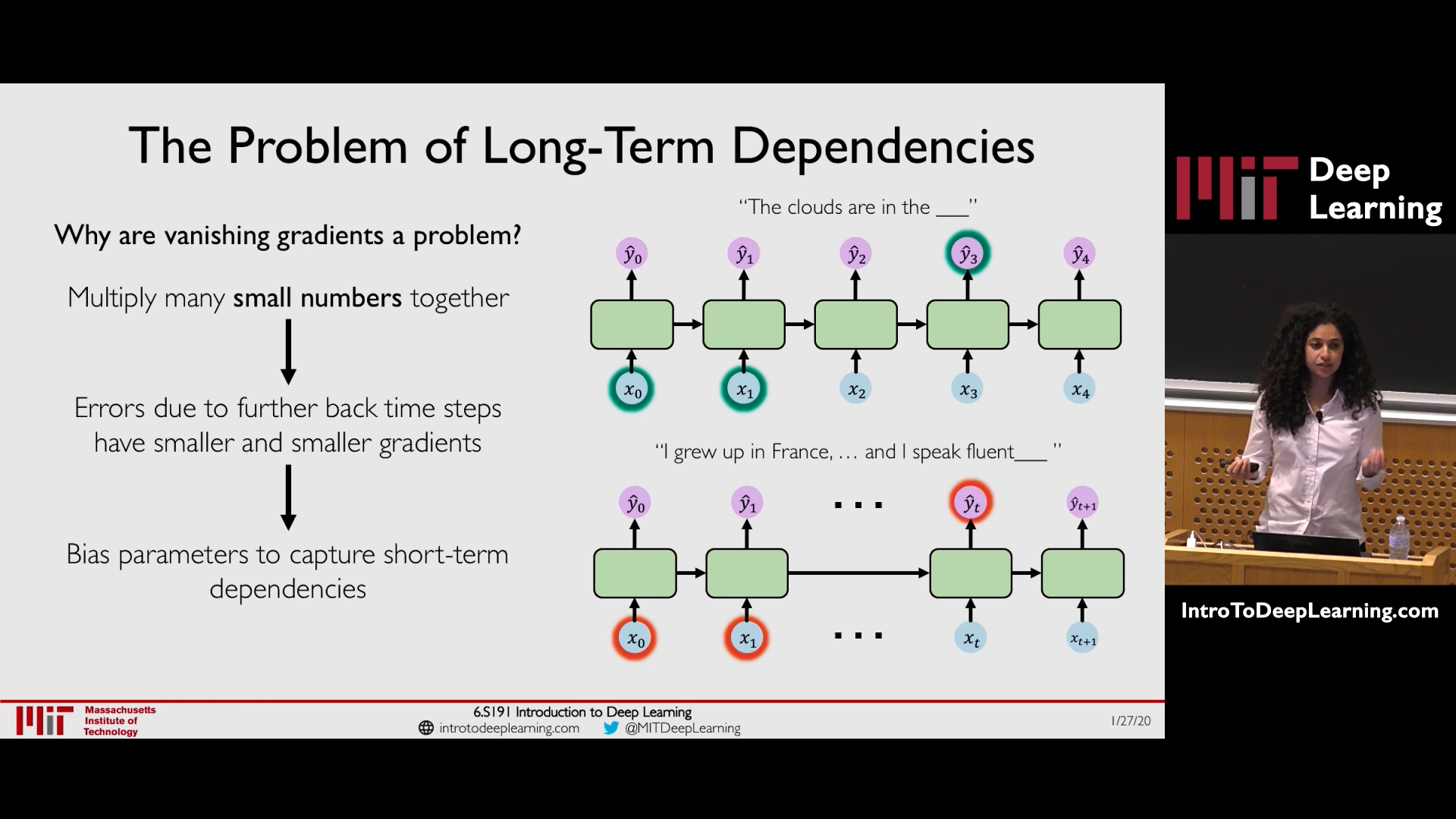

4.1. The Problem of Long-Term Dependencies: Vanishing Gradient

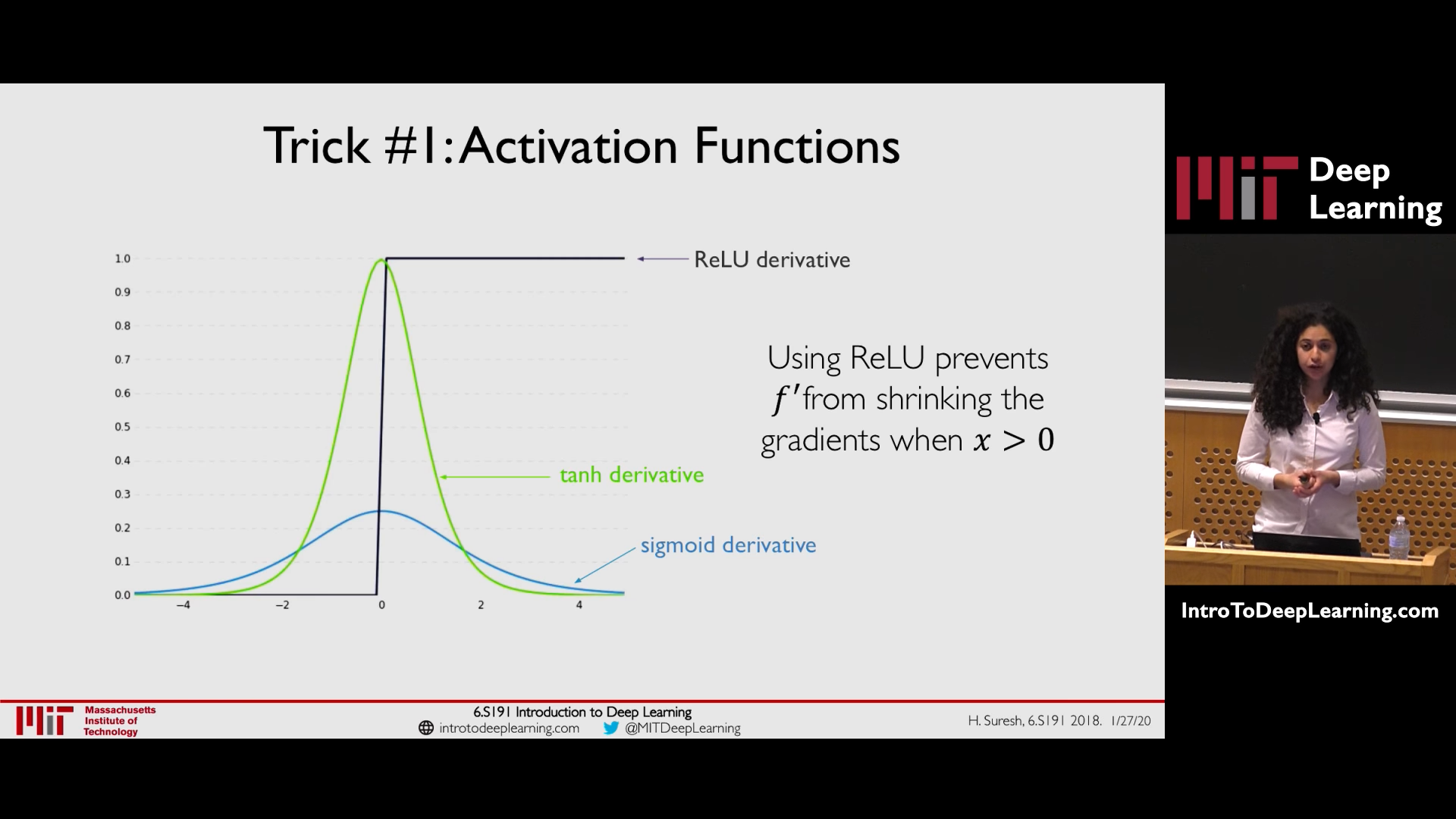
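A small numeric sketch of why the gradient vanishes, assuming a scalar RNN \(h_t = \tanh(w\,h_{t-1} + x_t)\). The weight `w = 0.5`, the constant inputs, and the helper `gradient_through_time` are hypothetical choices for illustration. By the chain rule, each step multiplies the gradient by \(w(1 - h_t^2)\), so many factors smaller than 1 are multiplied together:

```python
import math

def gradient_through_time(w, xs, h0=0.0):
    """Return |d h_t / d h_0| for t = 1..T in a scalar RNN.

    The recurrence is h_t = tanh(w * h_{t-1} + x_t).
    """
    h, grad, history = h0, 1.0, []
    for x_t in xs:
        h = math.tanh(w * h + x_t)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
        history.append(abs(grad))
    return history

history = gradient_through_time(w=0.5, xs=[0.1] * 50)

# The contribution of the earliest state shrinks with every step.
print(history[0], history[9], history[49])
```

In this setting every factor is below 0.5 in magnitude, so the gradient reaching the first time step after 50 steps is smaller than \(0.5^{50}\).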

4.1.1. Trick 1: Activation Function - ReLU (derivative is 1 for x > 0 and 0 otherwise)
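A rough sketch of the effect, looking only at the activation's contribution to the gradient product and ignoring the weights. The pre-activation value `2.0` and the step count `30` are arbitrary illustrative numbers:

```python
import math

def sigmoid_grad(x):
    """Derivative of the sigmoid; never larger than 0.25."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

def tanh_grad(x):
    """Derivative of tanh; at most 1, and small for large |x|."""
    return 1.0 - math.tanh(x) ** 2

def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0

steps = 30
pre_activation = 2.0  # hypothetical value, assumed equal at every step

# Multiply the same local derivative across all time steps.
for name, grad in [("sigmoid", sigmoid_grad), ("tanh", tanh_grad), ("relu", relu_grad)]:
    print(name, grad(pre_activation) ** steps)
```

For positive pre-activations the ReLU factor stays at exactly 1, while the sigmoid and tanh factors shrink the product at every step.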

4.1.2. Trick 2: Initialize the weights to the identity matrix and the biases to zero
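A toy sketch of what this initialization does at the start of training, assuming a ReLU recurrence and, as a hypothetical simplification, an input that is already the same size as the hidden state. With identity recurrent weights and zero bias, a non-negative hidden state is carried forward unchanged when the input is zero, instead of shrinking at every step:

```python
def relu(v):
    return [max(0.0, a) for a in v]

def identity(n):
    """n x n identity matrix as a list of rows."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matvec(M, v):
    return [sum(m * a for m, a in zip(row, v)) for row in M]

def step(h_prev, x_t, W_hh, b):
    """h_t = relu(W_hh h_{t-1} + x_t + b); x_t is assumed pre-projected."""
    pre = [r + x + b_i for r, x, b_i in zip(matvec(W_hh, h_prev), x_t, b)]
    return relu(pre)

n = 3
W_hh, b = identity(n), [0.0] * n
h = [0.5, 1.0, 2.0]

# Run 100 steps with zero input: the state is copied forward exactly.
for _ in range(100):
    h = step(h, [0.0] * n, W_hh, b)
print(h)  # [0.5, 1.0, 2.0]
```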

4.1.3. Trick 3: Gated Cells (LSTM, GRU, etc.) - Best

Use a more complex recurrent unit with gates to control what information is passed through.

5. LSTMs

5.1. Standard RNN

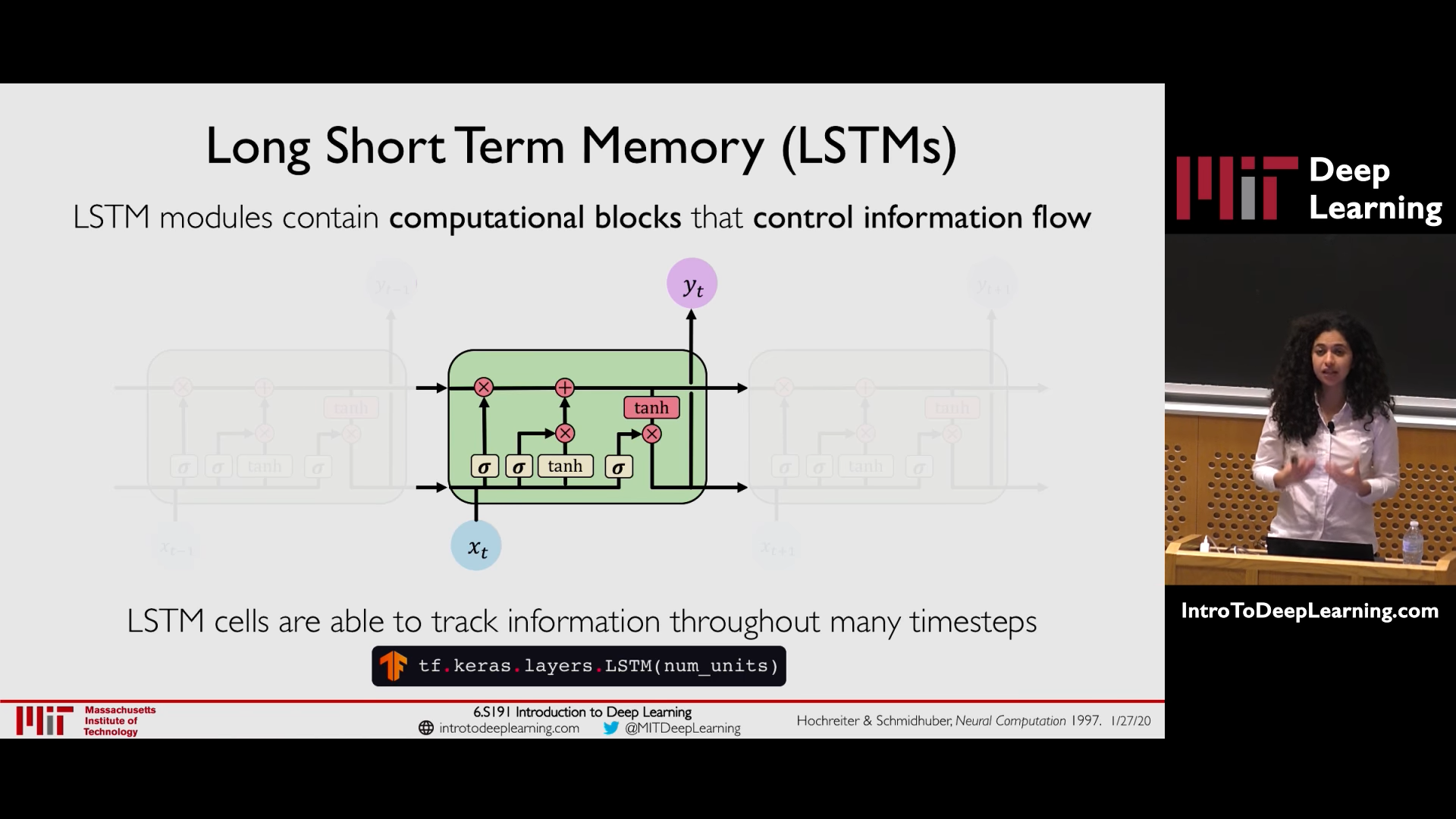

5.2. LSTMs

5.3. Information is added or removed through structures called gates: a sigmoid neural net layer followed by pointwise multiplication
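A minimal sketch of a gate. The sigmoid squashes each gate value into (0, 1), and pointwise multiplication then decides how much of each entry passes through. The `gate` helper and the hand-picked `gate_logits` are hypothetical; in an LSTM the gate values come from a learned layer:

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gate(values, gate_logits):
    """Scale each value by a sigmoid gate: near 0 blocks, near 1 passes."""
    return [sigmoid(g) * v for v, g in zip(values, gate_logits)]

values = [2.0, -3.0, 5.0]
gate_logits = [10.0, -10.0, 0.0]  # open, closed, half-open

gated = gate(values, gate_logits)
print(gated)  # roughly [2.0, 0.0, 2.5]
```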

5.4. Forget, Store, Update, Output

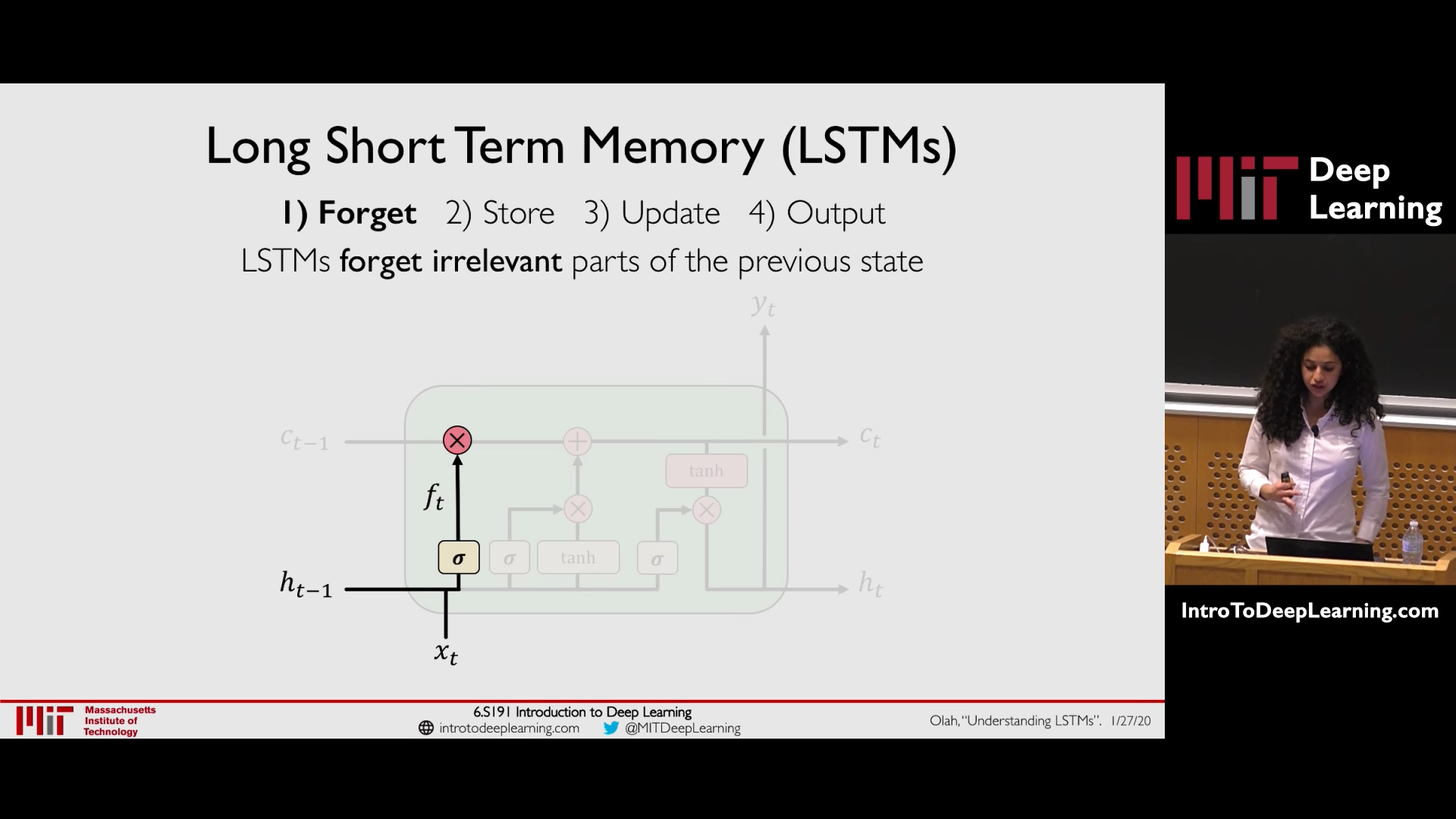

5.4.1. Forget

Decide what information is going to be thrown away from the cell state, depending on the previous hidden state \(h_{t-1}\) and the current input \(x_t\).

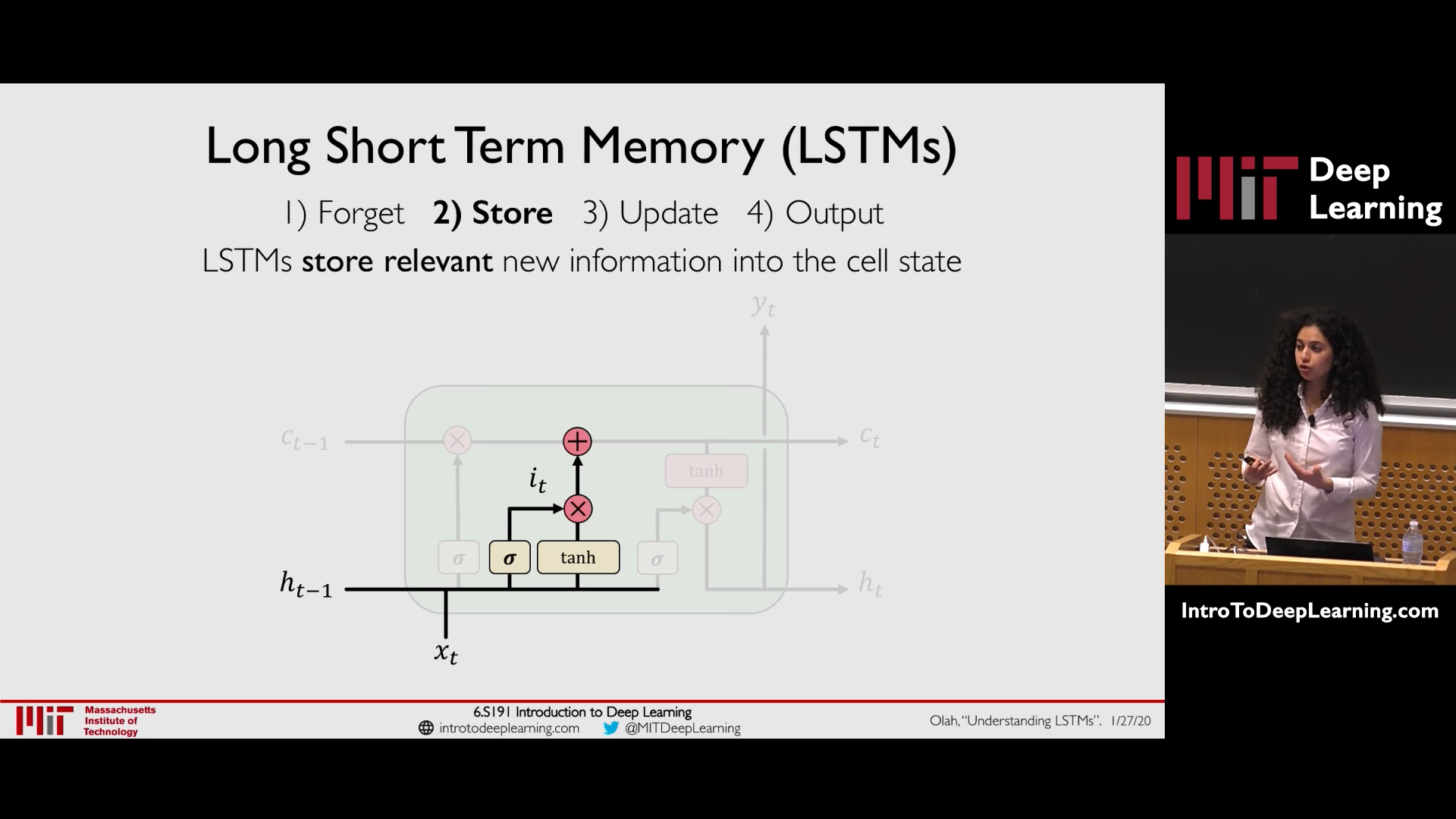

5.4.2. Store

Decide which parts of the new information are important and store them in the cell state.

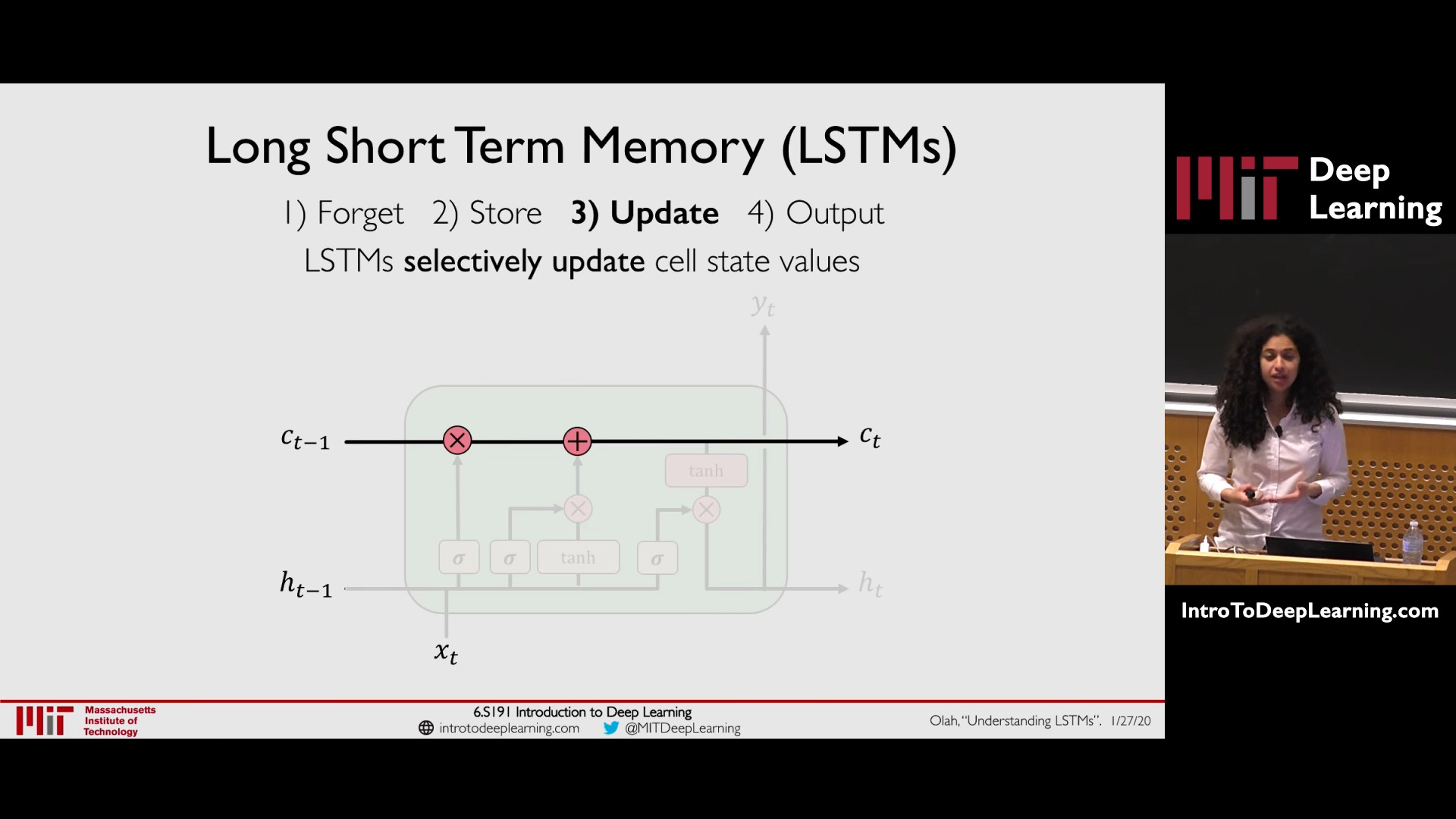

5.4.3. Update

Use the relevant parts of the prior information and the current input to selectively update the cell state values.

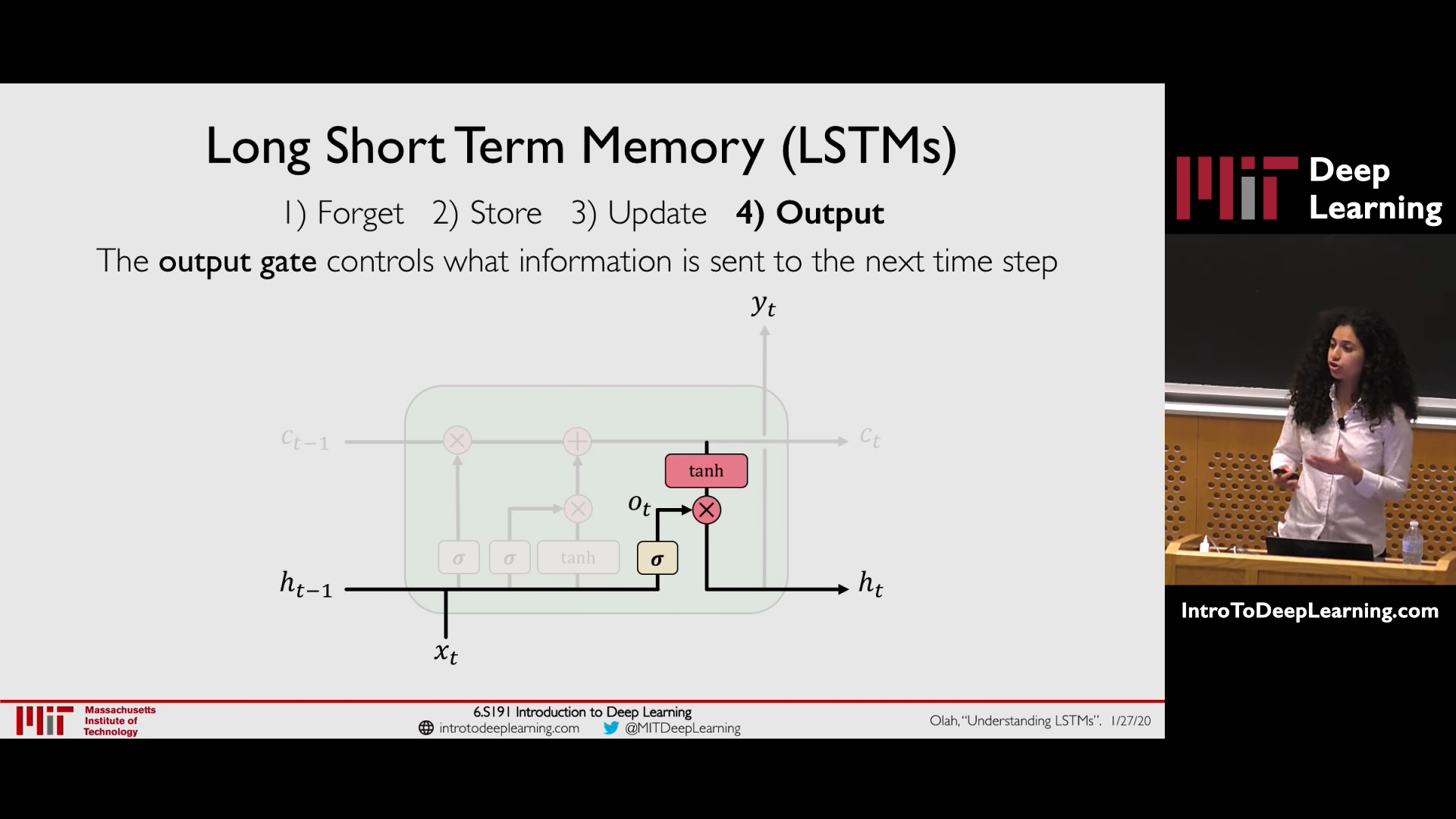

5.4.4. Output

Decide what information stored in the cell state is used to compute the hidden state that is carried over to the next time step.
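The four steps above can be sketched as a single LSTM step. This assumes the standard LSTM formulation, which the notes do not spell out: \(f_t\), \(i_t\), \(o_t\) are sigmoid gates computed from the concatenation \([h_{t-1}, x_t]\), \(\tilde{c}_t\) is a tanh candidate, \(c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\), and \(h_t = o_t \odot \tanh(c_t)\). The parameter names (`W_f`, `b_f`, and so on) and the helpers `affine`, `lstm_step`, and `init_params` are illustrative:

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, b, v):
    """Compute W v + b for a matrix W (list of rows), bias b, vector v."""
    return [sum(w * a for w, a in zip(row, v)) + b_i for row, b_i in zip(W, b)]

def lstm_step(h_prev, c_prev, x_t, params):
    z = h_prev + x_t  # list concatenation: [h_{t-1}, x_t]

    # Forget: how much of each old cell state entry to keep.
    f = [sigmoid(a) for a in affine(params["W_f"], params["b_f"], z)]
    # Store: which new candidate values matter, and the candidates themselves.
    i = [sigmoid(a) for a in affine(params["W_i"], params["b_i"], z)]
    c_tilde = [math.tanh(a) for a in affine(params["W_c"], params["b_c"], z)]
    # Update: combine the kept old state with the gated new candidates.
    c_t = [f_k * c_k + i_k * g_k
           for f_k, c_k, i_k, g_k in zip(f, c_prev, i, c_tilde)]
    # Output: a filtered view of the cell state becomes the hidden state.
    o = [sigmoid(a) for a in affine(params["W_o"], params["b_o"], z)]
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]
    return h_t, c_t

def init_params(input_size, hidden_size, rng):
    """Small random weights and zero biases; a stand-in for learned values."""
    params = {}
    for name in ("f", "i", "c", "o"):
        params["W_" + name] = [
            [rng.uniform(-0.5, 0.5) for _ in range(hidden_size + input_size)]
            for _ in range(hidden_size)
        ]
        params["b_" + name] = [0.0] * hidden_size
    return params

rng = random.Random(0)
params = init_params(input_size=3, hidden_size=4, rng=rng)
h, c = [0.0] * 4, [0.0] * 4
for x_t in [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]:
    h, c = lstm_step(h, c, x_t, params)
print(h)
print(c)
```

Note that the cell state `c` is only touched by elementwise multiplication and addition, while the hidden state `h` is a gated, squashed copy of it.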

5.5. Uninterrupted gradient flow through the cell state
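One way to see this, assuming the standard cell state update (the symbols \(f_t\) for the forget gate, \(i_t\) for the store gate, and \(\tilde{c}_t\) for the candidate values are not defined in these notes):

\[
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
\]

Along the direct path from \(c_{t-1}\) to \(c_t\), treating the gate values as given, the derivative is

\[
\frac{\partial c_t}{\partial c_{t-1}} = \operatorname{diag}(f_t)
\]

So the gradient flowing back through the cell state is only scaled elementwise by the forget gate at each step. It is not repeatedly multiplied by a weight matrix or by the derivative of a squashing nonlinearity, which is what shrinks the gradient in a standard RNN.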

5.6. LSTMs: Key Concepts

- Maintain a cell state that is separate from what is output

- Use gates to control the flow of information

- Forget gate gets rid of irrelevant information

- Store relevant information from current input

- Selectively update cell state

- Output gate returns a filtered version of the cell state

- Backpropagation through time with uninterrupted gradient flow