LSTM


See the notes Recurrent Neural Network - MIT 6.S191 2020 and RNN and Transformers (MIT 6.S191 2022) for links to the lecture videos.

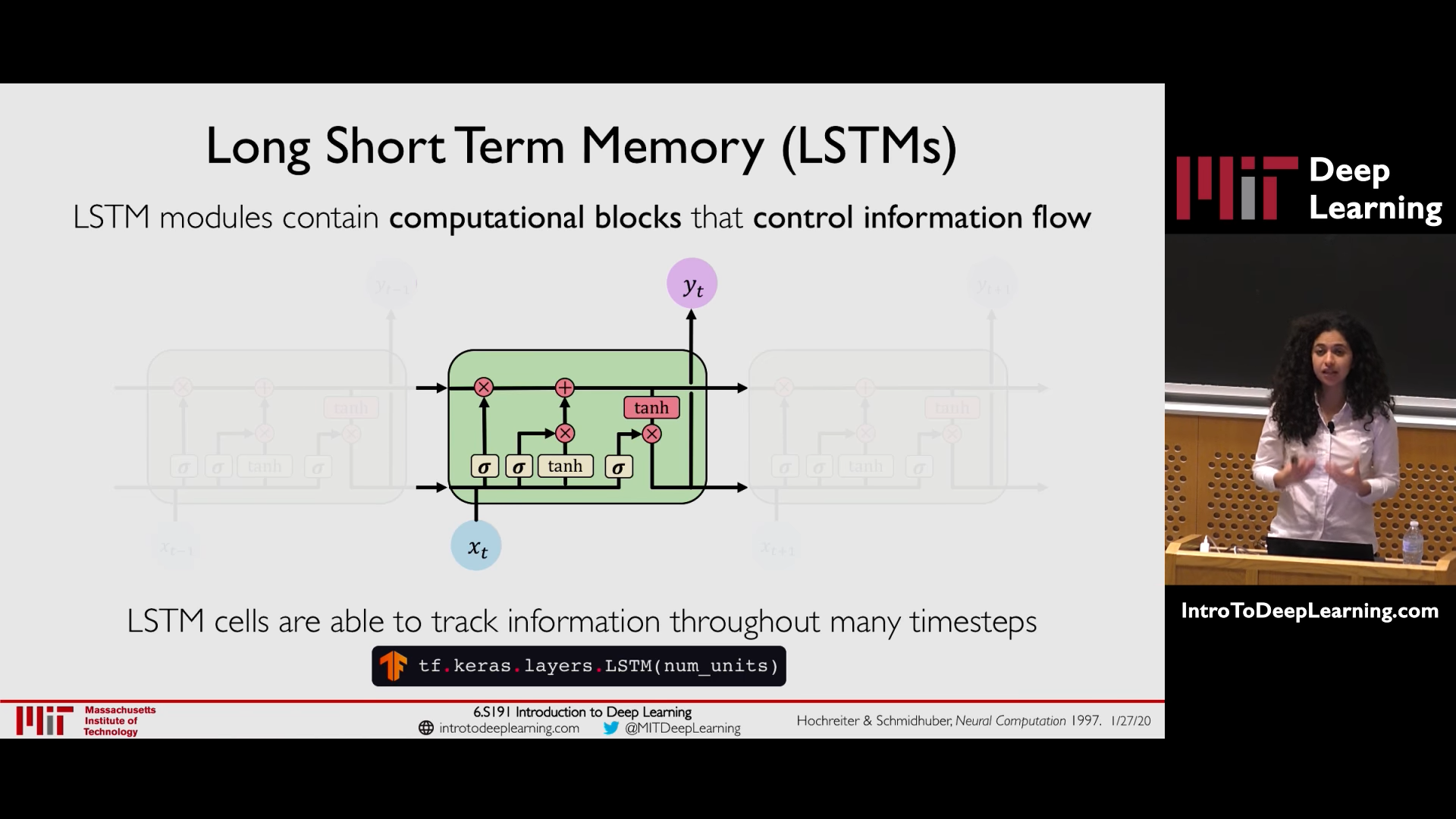

Key Concepts:

- Maintain a separate cell state from what is outputted

- Use gates to control the flow of information

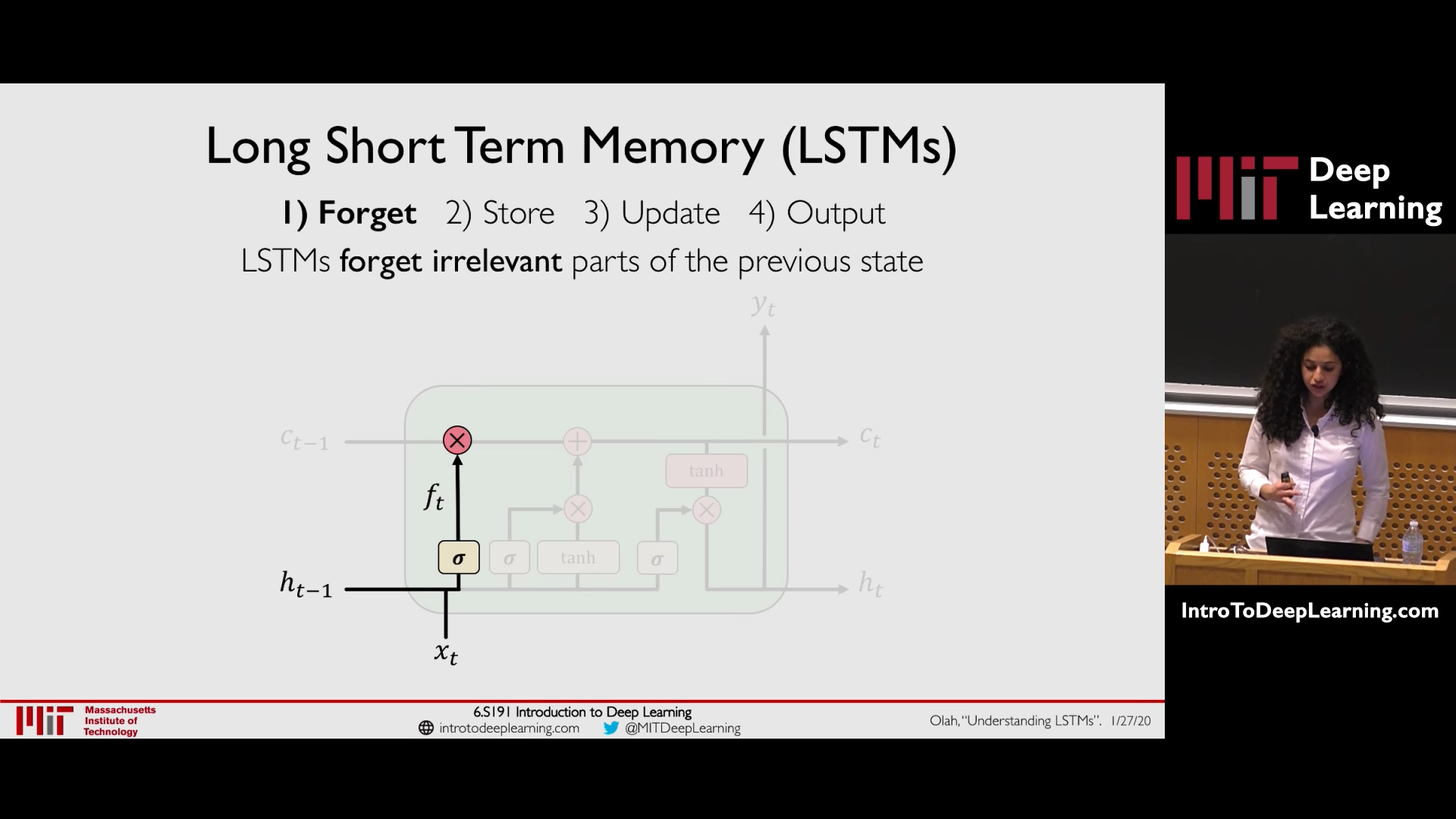

- Forget gate gets rid of irrelevant information

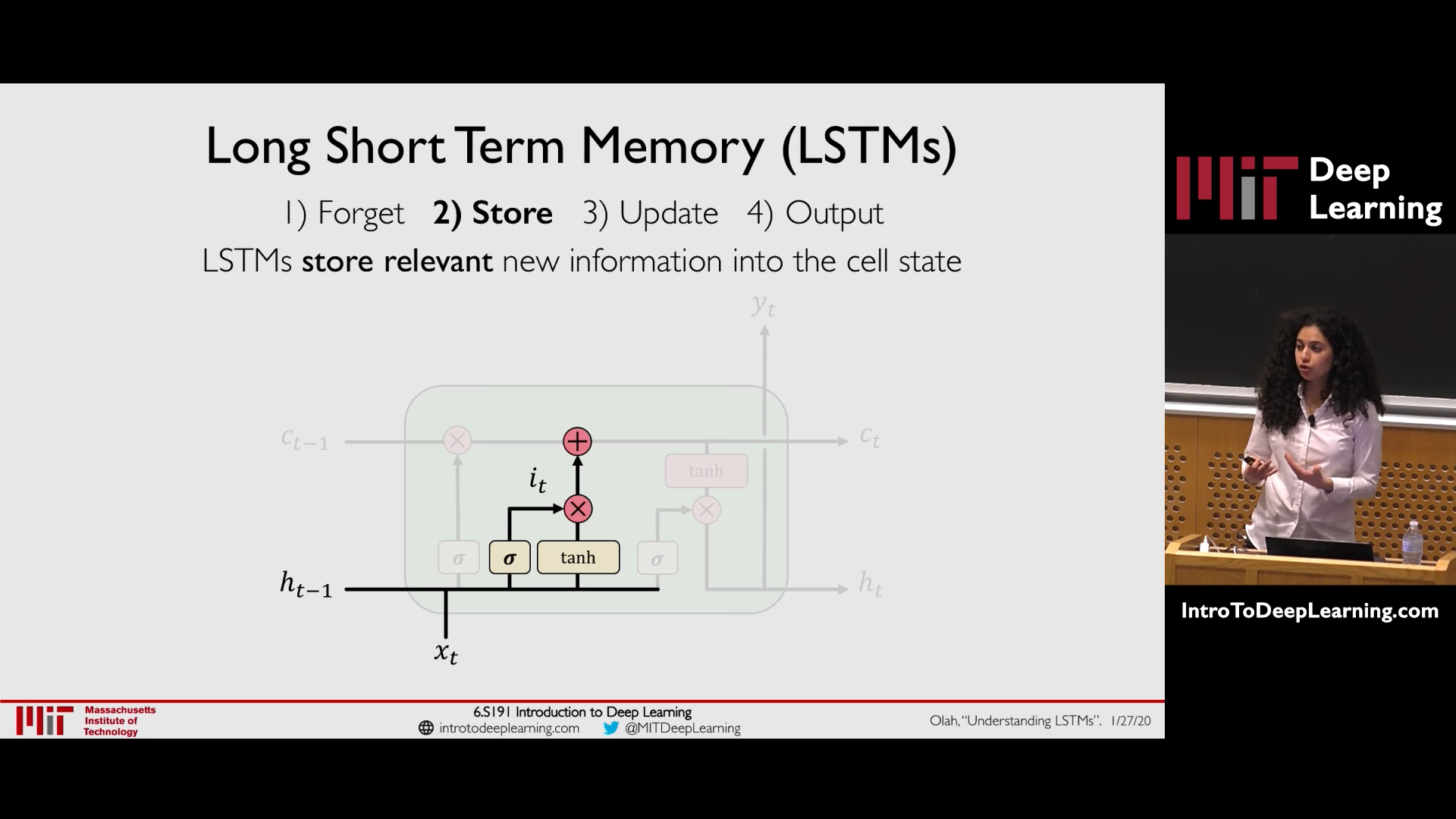

- Store relevant information from current input

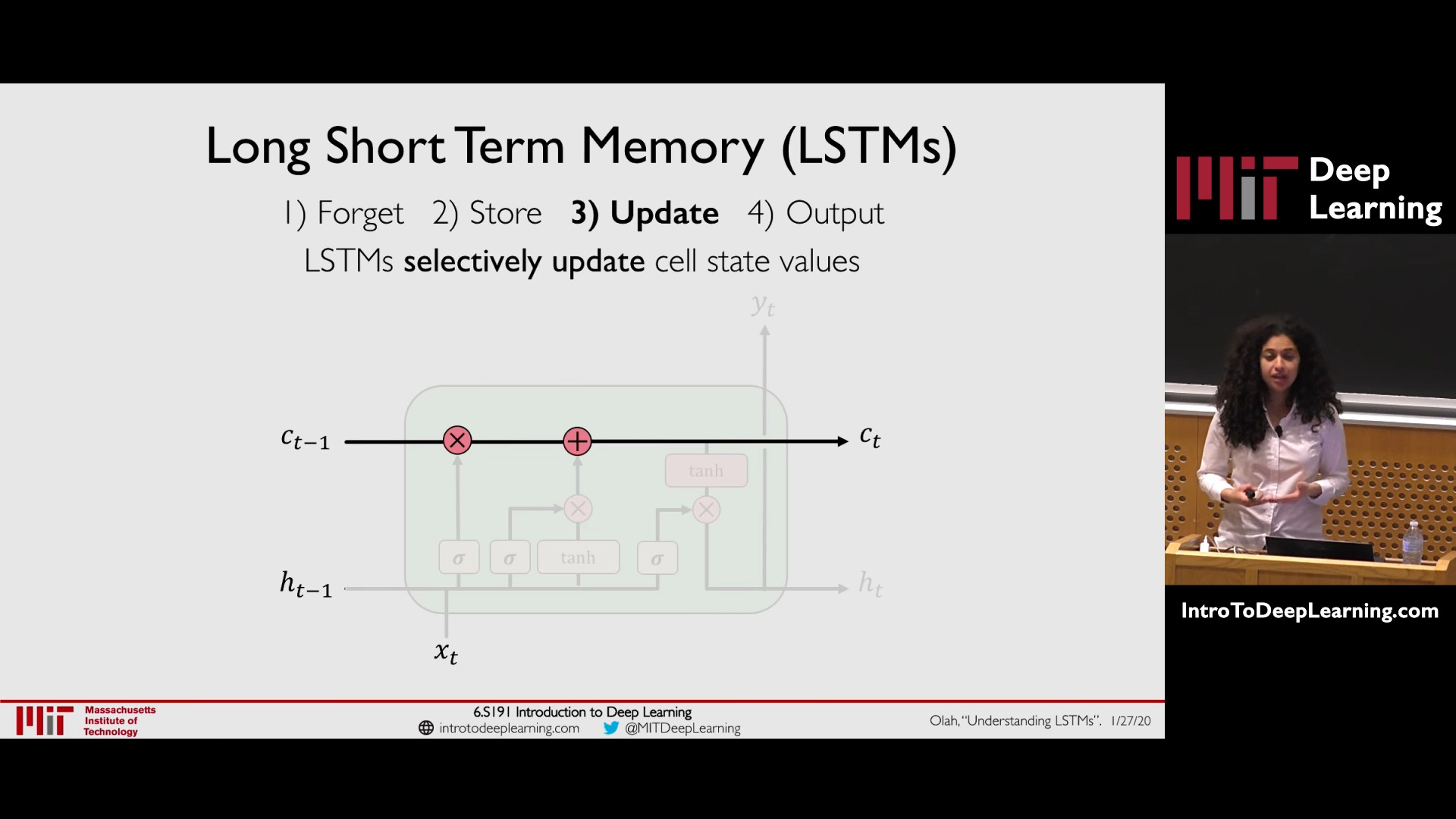

- Selectively update cell state

- Output gate returns a filtered version of the cell state

- Backpropagation through time with uninterrupted gradient flow

Training Recurrent Nets is Optimization Over Programs

Figure: Uninterrupted flow of gradient through the cell state
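Why the gradient flows "uninterrupted" can be sketched by differentiating along the direct cell-state path. This assumes the standard LSTM cell update \(c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\), with forget gate \(f_t\), input gate \(i_t\), candidate values \(\tilde{c}_t\), and elementwise product \(\odot\). It also ignores the indirect dependence of the gates on \(c_{t-1}\) through \(h_{t-1}\):

\[
\frac{\partial c_t}{\partial c_{t-1}} = \operatorname{diag}(f_t),
\qquad
\frac{\partial c_t}{\partial c_{t-k}} = \prod_{j=t-k+1}^{t} \operatorname{diag}(f_j)
\]

A vanilla RNN repeatedly multiplies the gradient by a recurrent weight matrix and by the derivative of a squashing nonlinearity. Along this path, by contrast, the gradient is only scaled elementwise by the forget gates. It can therefore survive many time steps when those gates stay close to 1.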

1. History of LSTM

Long short-term memory (LSTM) networks were invented by Hochreiter and Schmidhuber in 1997 and set accuracy records in multiple application domains.

LSTMs set records in machine translation, language modeling, and multilingual language processing. Combined with convolutional neural networks (CNNs), LSTMs improved automatic image captioning.

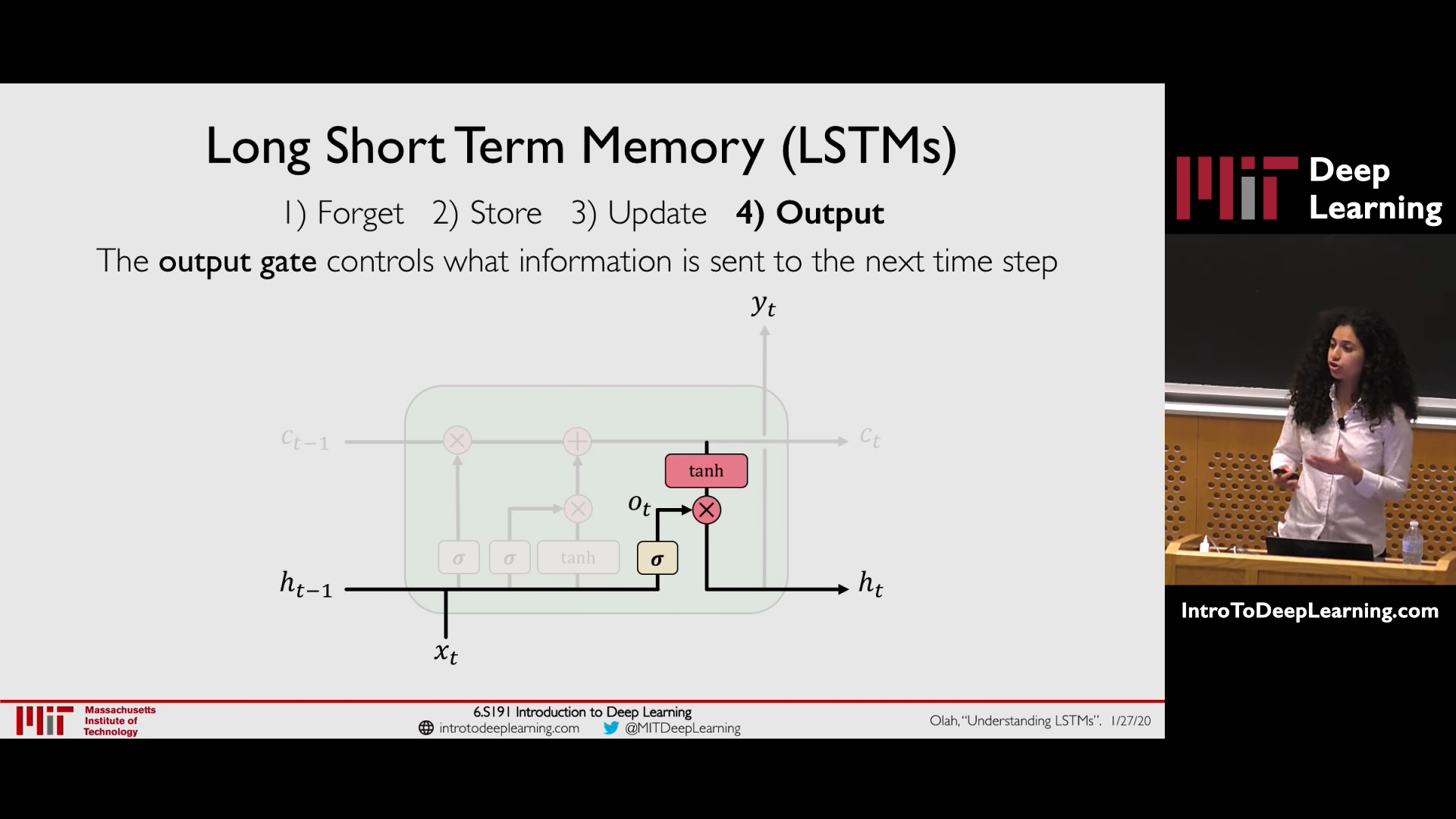

2. Forget, Store, Update, Output

2.1. Forget

Decide what information to throw away from the cell state, based on the previous hidden state \(h_{t-1}\) and the current input \(x_t\).
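In the standard formulation, the forget gate can be written as shown below. This notation is common usage and is assumed here, not copied from the lecture slides. \(\sigma\) is the logistic sigmoid, \([h_{t-1}, x_t]\) is the concatenation of the previous hidden state and the current input, and \(W_f, b_f\) are a learned weight matrix and bias. Each entry of \(f_t\) lies in \((0, 1)\). Values near 0 discard the corresponding entry of the previous cell state \(c_{t-1}\), and values near 1 keep it.

\[
f_t = \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right)
\]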

2.2. Store

Decide which parts of the new information are relevant and store them in the cell state.
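In the standard formulation (assumed here), storing has two parts. An input gate \(i_t\) decides which entries to write, and a vector of candidate values \(\tilde{c}_t\) holds what could be written. \(\sigma\) is the logistic sigmoid, \([h_{t-1}, x_t]\) concatenates the previous hidden state and the current input, and \(W_i, b_i, W_c, b_c\) are learned parameters:

\[
\begin{aligned}
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right)
\end{aligned}
\]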

2.3. Update

Use the relevant parts of the prior information and of the current input to selectively update the cell state values.
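In the standard formulation (assumed here), the update mixes old and new information elementwise (\(\odot\)). It uses the forget gate \(f_t\), the input gate \(i_t\), and the candidate values \(\tilde{c}_t\):

\[
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
\]

Consider a single entry with \(f_t = 0.9\), \(c_{t-1} = 2\), \(i_t = 0.5\), and \(\tilde{c}_t = -0.4\). The new value is \(0.9 \cdot 2 + 0.5 \cdot (-0.4) = 1.8 - 0.2 = 1.6\).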

2.4. Output

Decide what information stored in the cell state is used to compute the hidden state that is carried over to the next time step.
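The sketch below puts the four steps together as a single time step in plain Python, without NumPy, so vectors are lists. It assumes the standard LSTM equations:

- forget gate \(f_t\), input gate \(i_t\), and candidate \(\tilde{c}_t\)
- cell update \(c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\)
- output gate \(o_t = \sigma(W_o[h_{t-1}, x_t] + b_o)\)
- hidden state \(h_t = o_t \odot \tanh(c_t)\)

The function names (`sigmoid`, `affine`, `lstm_step`) and the layout of the `params` dictionary are hypothetical choices for illustration, not a library API.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W: Matrix, b: Vector, v: Sequence[float]) -> Vector:
    """Return W @ v + b using plain lists (one output per row of W)."""
    return [sum(w * x for w, x in zip(row, v)) + b_k for row, b_k in zip(W, b)]


def lstm_step(
    x: Vector, h_prev: Vector, c_prev: Vector, params: Dict[str, list]
) -> Tuple[Vector, Vector]:
    """One LSTM time step; returns (h_t, c_t).

    Hypothetical parameter layout: params["W_f"], ["W_i"], ["W_c"], ["W_o"]
    are hidden x (hidden + input) matrices; params["b_f"], ["b_i"], ["b_c"],
    ["b_o"] are bias vectors of length hidden.
    """
    z = h_prev + x  # list concatenation gives [h_{t-1}, x_t]

    # Forget: each f_t entry is in (0, 1); near 0 drops, near 1 keeps c_{t-1}
    f = [sigmoid(a) for a in affine(params["W_f"], params["b_f"], z)]

    # Store: input gate i_t and candidate values c~_t
    i = [sigmoid(a) for a in affine(params["W_i"], params["b_i"], z)]
    c_tilde = [math.tanh(a) for a in affine(params["W_c"], params["b_c"], z)]

    # Update: c_t = f_t * c_{t-1} + i_t * c~_t, elementwise
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]

    # Output: o_t filters tanh(c_t) to produce the new hidden state h_t
    o = [sigmoid(a) for a in affine(params["W_o"], params["b_o"], z)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


if __name__ == "__main__":
    # Toy usage: hidden size 1, input size 1, all weights and biases zero,
    # so every gate is sigmoid(0) = 0.5 and the candidate is tanh(0) = 0.
    params = {name: [[0.0, 0.0]] for name in ("W_f", "W_i", "W_c", "W_o")}
    params.update({name: [0.0] for name in ("b_f", "b_i", "b_c", "b_o")})
    h, c = lstm_step([1.0], [0.0], [2.0], params)
    print(c, h)  # c == [1.0], h == [0.5 * tanh(1.0)]
```

With all gates at 0.5 and a zero candidate, the cell state is simply halved. Setting a large positive forget bias and a large negative input bias instead makes the cell state pass through almost unchanged.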

3. Links

- Handling sequences of different lengths for batch processing: https://stackoverflow.com/questions/49466894/how-to-correctly-give-inputs-to-embedding-lstm-and-linear-layers-in-pytorch/49473068#49473068
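The linked answer concerns PyTorch, where `torch.nn.utils.rnn.pack_padded_sequence` lets the LSTM skip padded time steps. The plain-Python sketch below illustrates only the underlying idea, without any library:

1. Pad every sequence to a common length.
2. Remember each sequence's true length.
3. Stop updating a sequence's state after its last real step.

`pad_batch`, `run_batch`, and the toy recurrence \(h \leftarrow 0.5h + x\) are hypothetical stand-ins for a real LSTM.

```python
from typing import Callable, List, Sequence, Tuple


def pad_batch(
    seqs: Sequence[Sequence[float]], pad_value: float = 0.0
) -> Tuple[List[List[float]], List[int]]:
    """Right-pad every sequence to the longest length; also return true lengths.

    Assumes a non-empty batch.
    """
    lengths = [len(s) for s in seqs]
    max_len = max(lengths)
    padded = [list(s) + [pad_value] * (max_len - len(s)) for s in seqs]
    return padded, lengths


def run_batch(
    padded: List[List[float]],
    lengths: List[int],
    step: Callable[[float, float], float],
) -> List[float]:
    """Step all sequences together, time step by time step.

    Each state is frozen once its sequence ends, so padded positions
    never change the final state.
    """
    h = [0.0] * len(padded)
    for t in range(len(padded[0])):
        for b, row in enumerate(padded):
            if t < lengths[b]:  # mask: only real (non-padded) steps update h
                h[b] = step(h[b], row[t])
    return h


padded, lengths = pad_batch([[1.0, 2.0, 3.0], [4.0]])
print(padded, lengths)  # [[1.0, 2.0, 3.0], [4.0, 0.0, 0.0]] [3, 1]
print(run_batch(padded, lengths, lambda h, x: 0.5 * h + x))  # [4.25, 4.0]
```

Without the length mask, the second sequence would keep stepping over its two padding zeros (4.0 → 2.0 → 1.0) and end with a misleading final state of 1.0 instead of 4.0.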